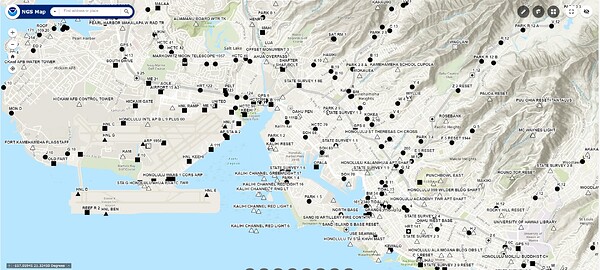

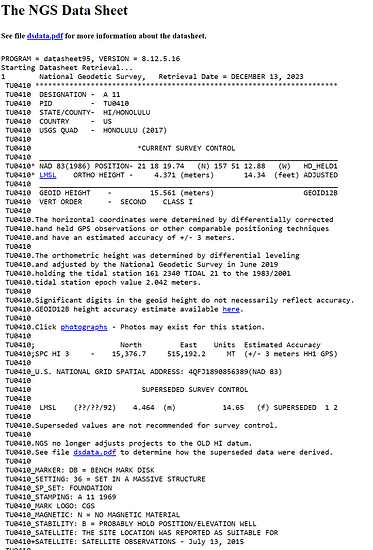

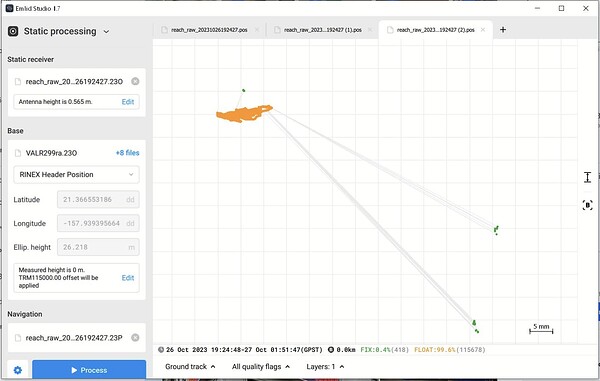

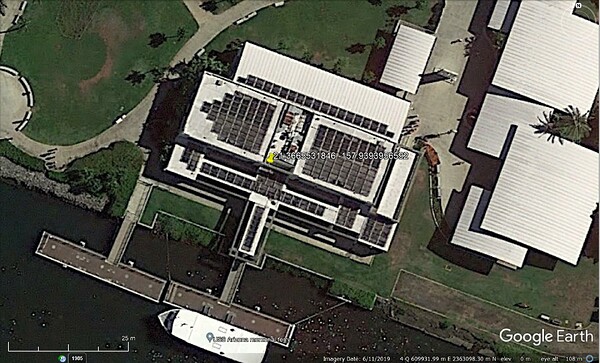

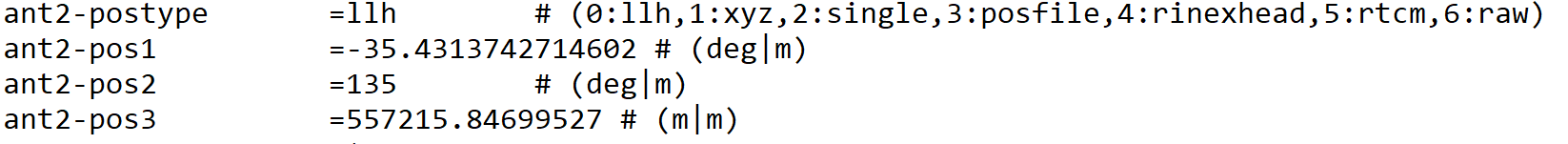

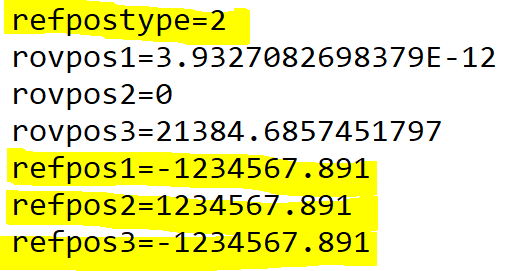

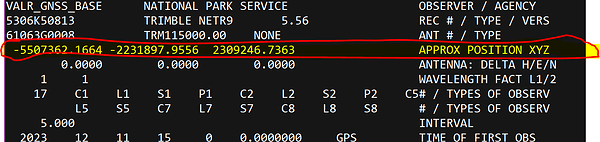

Hello, I’d like to make a feature request. I recently got an RS+ to create ground control points for drone photogrammetry. I’m using PPK with a base station here on O’ahu, the Pearl Harbor VALR station run by the National Park Service.

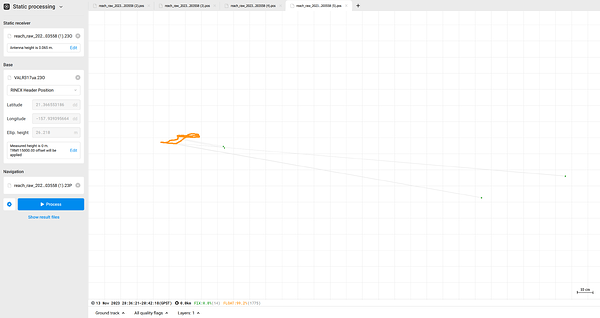

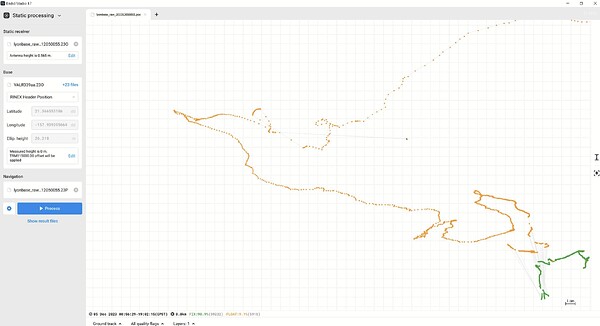

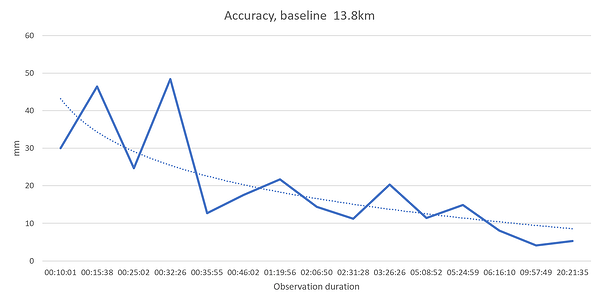

My workflow doesn’t involve creating projects at all; I’ve found it’s a lot easier to just turn on logging at each point and then turn it off. I usually let it collect data for 5 to 15 minutes, or longer if I have time, and then do static processing, viewing both the “multiple solution” and “single solution” results. It would be great if this type of workflow were better supported, so I have some specific requests that I think would open up this kind of data collection to more people. The first is low-hanging fruit:

-

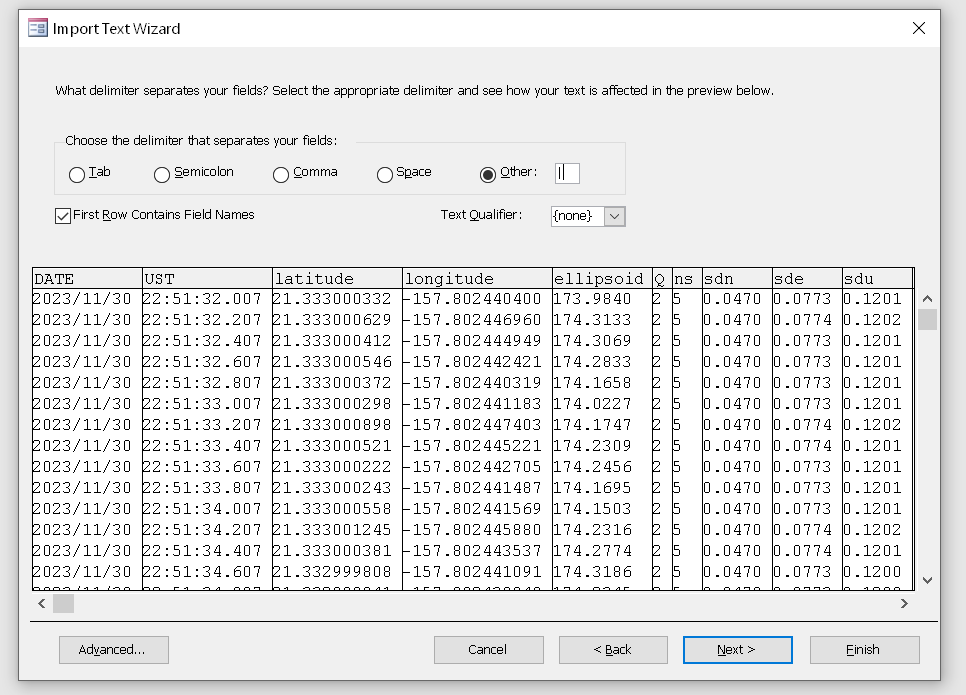

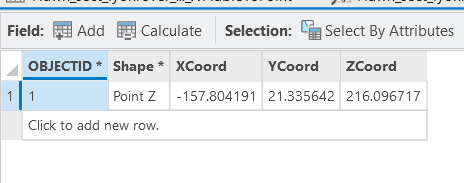

Allow exporting the multiple solution and single solution as a shapefile. Currently I have to copy and paste the coordinates from Emlid Studio, or carefully convert the results file to CSV to import to ArcGIS Pro.

-

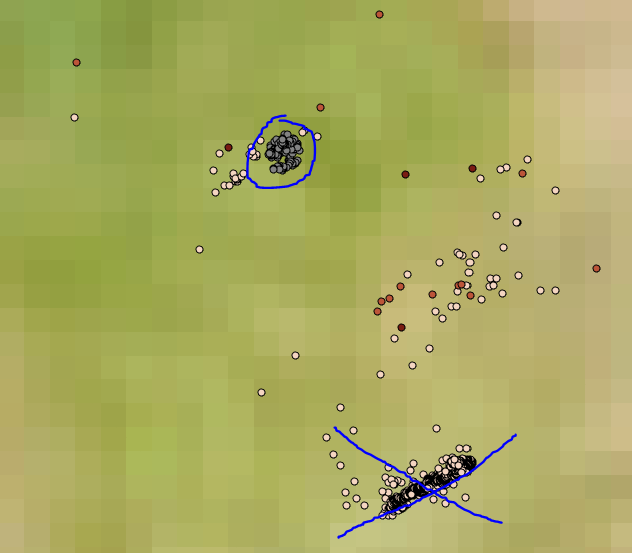

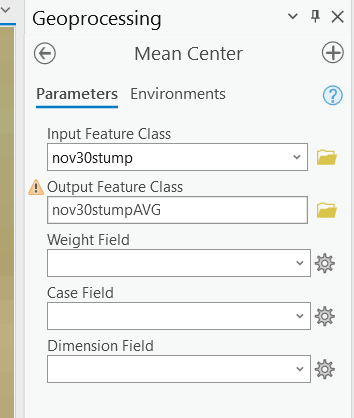

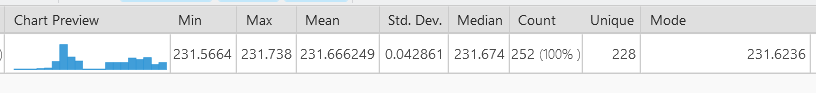

Allow solution points to be removed in Emlid Studio. Often I get a sequence of orange points showing “float” solution, and a few far flung outliers that the software considers “fix”. These fix points seem to get used when you do a single solution. So have to convert the multiple solutions to CSV and then do your averaging in GIS software. If the outliers could be deleted or excluded, that would make it much easier.

-

Let us select a set of points in the map view and use those to create a point. A timeline view would also be very helpful. That would enable just walking with the device and selecting the points where you’ve stopped, then using the timeline to narrow it down further to when you know for sure the Reach was stationary. Then the resulting set of points could be exported to a shapefile.

-

This is kind of an alternative to 3. Allow selecting a set of points and exporting just those to a shapefile. That would let you create a shapefile for each set of points you want to average, then do the averaging in GIS software.

-

Allow choosing different height datums for your results. In Hawai’i, Mean Sea Level is the most useful geoide. Ellipsoid isn’t very useful because we’re about 16 feet above the ellipsoid but of course it varies by location. Most analysis here involves MSL, particularly due to sea level rise concerns. The standard for LiDAR and photogrammetry here is MSL. Having to convert every height individually takes a lot of labor!

-

Allow choosing different coordinate systems for your results. This one is less important to me because I use WGS84. But for many users, I’m sure they would prefer a local coordinate system.
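For anyone doing the same thing by hand in the meantime, the filtering and averaging described in items 2 to 5 can be scripted. Below is a minimal sketch, not a description of how Emlid Studio works internally. It assumes the results file is an RTKLIB-style `.pos` text file with `%` header lines and columns in the order date, time, latitude, longitude, height, Q (where Q = 1 means fix and Q = 2 means float), and that timestamps are written as `YYYY/MM/DD hh:mm:ss.sss` so they sort as plain text. The function names, the 0.5 m outlier threshold, and the `geoid_undulation_m` parameter are all hypothetical choices for illustration; the undulation value has to come from a geoid model for your location, and a geoid-based height is only an approximation of local MSL.

```python
import csv
import io
import math
from statistics import median


def parse_pos(text):
    """Parse RTKLIB-style .pos text (assumed layout) into a list of dicts."""
    rows = []
    for line in text.splitlines():
        # Skip blank lines and '%' header/comment lines.
        if not line.strip() or line.lstrip().startswith("%"):
            continue
        parts = line.split()
        if len(parts) < 6:
            continue
        rows.append({
            "time": parts[0] + " " + parts[1],
            "lat": float(parts[2]),
            "lon": float(parts[3]),
            "height": float(parts[4]),
            "q": int(parts[5]),
        })
    return rows


def average_point(rows, quality=1, max_dist_m=0.5, start=None, end=None,
                  geoid_undulation_m=None):
    """Average epochs of one quality, dropping far-flung outliers.

    start/end are optional timestamp strings that bound the stationary period.
    Outliers are epochs farther than max_dist_m (horizontal) from the median.
    """
    sel = [r for r in rows
           if r["q"] == quality
           and (start is None or r["time"] >= start)
           and (end is None or r["time"] <= end)]
    if not sel:
        raise ValueError("no epochs match the quality/time filter")

    lat0 = median(r["lat"] for r in sel)
    lon0 = median(r["lon"] for r in sel)
    # Rough metres-per-degree scale; good enough for a sub-metre outlier check.
    m_lat = 111_320.0
    m_lon = m_lat * math.cos(math.radians(lat0))
    kept = [r for r in sel
            if math.hypot((r["lat"] - lat0) * m_lat,
                          (r["lon"] - lon0) * m_lon) <= max_dist_m]
    if not kept:
        raise ValueError("all epochs were rejected as outliers")

    n = len(kept)
    height = sum(r["height"] for r in kept) / n
    if geoid_undulation_m is not None:
        # Orthometric height H = ellipsoidal height h - geoid undulation N.
        height -= geoid_undulation_m
    return {
        "lat": sum(r["lat"] for r in kept) / n,
        "lon": sum(r["lon"] for r in kept) / n,
        "height": height,
        "n": n,
    }


def to_csv(name, point):
    """Return a one-point CSV string that GIS software can import."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["name", "lat", "lon", "height", "epochs"])
    writer.writerow([name, f"{point['lat']:.9f}", f"{point['lon']:.9f}",
                     f"{point['height']:.3f}", point["n"]])
    return buf.getvalue()
```

Typical use would be to read one log's results file, call `average_point(parse_pos(text), start=..., end=...)` for the period when the receiver was known to be stationary, and write the `to_csv` output to disk for import into GIS software. A median-based cutoff is used here because a handful of distant “fix” epochs would otherwise pull a plain mean away from the true position.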

I think my suggestions would make an alternative PPK workflow possible for a lot of people, including those who haven’t considered it yet. I’ve learned from the Facebook group that very few users are doing PPK, and most don’t even know what it is. So I think better PPK support would also open up Emlid hardware to more potential customers who have access to a public base station and can average points over longer periods, rather than relying on RTK.