I am just now getting into PPK on my Phantom 4 Pro using the kit I got from @Brian_Christal at TuffWing. He has a very nice step-by-step guide on processing Reach RTK or Reach M+ data using RTKLIB.

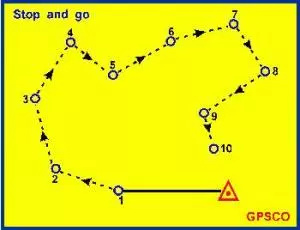

So, looking at this from a processing point of view, how does processing the camera events triggered on the M+ during a drone flight differ from processing a stop-and-go survey on the ground?

Using the Reach M+ on the ground would be much more difficult due to signal strength; at least, that is what I found with the original Reach RTK. But when the unit is in the air at 200-300’ AGL, it is a non-issue. The Reach RS does not have the option of camera events, but I am just thinking outside the box. It seems to me that a Reach M+ flight is a stop-and-go survey in the sky (hmmm, maybe just a “go survey” since there is no stopping!).

So why can’t ground surveys be processed exactly the same way that drone flights are processed using the Reach M+ module?

If the Reach RS supported camera events, could a camera not be put on a pole and take a picture to trigger the survey point (sort of like how @TB_RTK mounted the camera to view the bottom point of his latest dual-head super cool setup)? Actually, my question is not so much about using a camera to create an event (not sure how practical that would be anyway) but more about this: why is processing a stop-and-go survey in RTKLIB not the same as processing a drone flight using the M+? (Or is it?)
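
To make the question concrete, here is a rough Python sketch of how I picture the two cases. This is only my assumption about what happens once a kinematic solution exists, not a description of what RTKLIB actually does: for a camera event, the position is interpolated between the two epochs on either side of the trigger time, while for a stop, the epochs inside the occupation window are averaged. The track data and the function names (`position_at_event`, `position_over_stop`) are made up for illustration.

```python
from bisect import bisect_right

# Hypothetical kinematic solutions: (time_s, east_m, north_m, up_m), one row per epoch.
flight_track = [
    (0.0, 0.0, 0.0, 100.0),
    (1.0, 5.0, 0.0, 100.0),
    (2.0, 10.0, 0.0, 100.0),
    (3.0, 15.0, 0.0, 100.0),
]
pole_track = [
    (0.0, 10.02, 20.00, 1.98),
    (1.0, 9.98, 20.02, 2.02),
    (2.0, 10.00, 19.98, 2.00),
]


def position_at_event(track, t_event):
    """'Go' case: linearly interpolate the track at a camera event time."""
    times = [row[0] for row in track]
    if not times[0] <= t_event <= times[-1]:
        raise ValueError("event time is outside the track")
    # Index of the first epoch after the event, clamped to the last epoch.
    i = min(bisect_right(times, t_event), len(track) - 1)
    t0, *p0 = track[i - 1]
    t1, *p1 = track[i]
    w = (t_event - t0) / (t1 - t0)
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))


def position_over_stop(track, t_start, t_end):
    """'Stop' case: average every epoch inside the occupation window."""
    rows = [row[1:] for row in track if t_start <= row[0] <= t_end]
    if not rows:
        raise ValueError("no epochs inside the occupation window")
    return tuple(sum(col) / len(rows) for col in zip(*rows))


print(position_at_event(flight_track, 1.5))
print(position_over_stop(pole_track, 0.0, 2.0))
```

If that picture is right, the drone case gets one interpolated position per photo, while the ground case gets many epochs per point that can be averaged, which is the difference I am trying to understand.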

Thanks for any thoughts and insight

I will play around with some tests just to see how stuff compares. Take care TB!
