For others stumbling into this thread: I am in a similar situation. I have now conducted a number of surveys with our Emlid Reach RS+ units and am still establishing a practical, site-dependent workflow. My first project was over a month ago and involved collecting GCPs to georeference a sUAS flight. I am only now getting around to post-processing (PPK) my solutions. I will describe what I have found so far, since I have ended up in a similar position.

I showed up to the site with two Emlid Reach RS+ units in tow, one as my base and the other as my rover. I first searched for a nearby NGS marker that I had found on the NGS website, but no dice; I never found it. That left me no way to establish an absolute base coordinate that day. Oh well, I said, PPK it is. NTRIP was not an option yet, even though a nearby CORS site was broadcasting corrections over the web, because I was still awaiting my login credentials. I now have access, but all my possible NTRIP correction sources in the U.S. (California for me) are in NAD83. That’s another story for another time. I am working on a “solution/workaround” for that as well, but back to my field day.

I first set up my base station in an optimal location with wide open sky and began logging immediately after dialing in my settings per the documentation. Once satisfied, I averaged a proxy base location for 30 minutes, IIRC. Once the base coordinates were collected, I saved them manually. Looking back, I should have written this number down. It’s good to have, though it can be dug up later as well. Once the base was established, I fired up my rover, matched its settings to those of the base, and set up the LoRa connection between the two. LoRa did great; I had a fix most of the day, even running between tall pines and dense thickets of willow. I collected my GCPs, twelve in total, and exported my survey project out of the Reach app. Yay, now I’m done, but I have at least a meter of absolute error in all my points. So here I am, sitting at my workstation, needing to correct my control points before dropping them into my photogrammetry software.

I downloaded all the necessary files from the CORS station closest to my project site to process in RTKPost. As I said earlier, the CORS site here is referenced to NAD83, but I need WGS 84 for my Reach logs. I found the coordinate of the CORS station in the provided .coord file. I then used this tool from NGS/NOAA, which has already been posted quite extensively on this site, to convert from NAD83 to WGS 84 while also taking the movement of the plates into account.

NGS/NOAA - HTDP

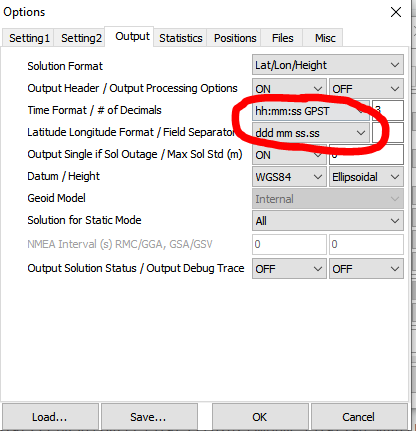

I then entered my newly transformed coordinate in the RTKPost options as the manual base station position for my chosen CORS site. Pay attention to positive and negative values (let’s say I accidentally dropped a negative sign and couldn’t figure out why my solutions were all Q=5…). Anyway, I fixed that and ran my Reach base logs as the rover against the CORS site I chose as base. I got a quality fix (Q=1) and then filtered by time interval to keep only my Q=1 solutions. I then used the statistics in RTKPost to extract an average of my desired points and there it was, a new base coordinate for my Reach unit! I took this coordinate and plotted it in GIS.
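For anyone who wants to script the averaging step instead of using the RTKPost statistics, here is a minimal sketch. It assumes the position file is in RTKLIB's lat/lon/height layout, where header lines start with `%` and each data row reads date, time, latitude (deg), longitude (deg), height (m), Q, and so on. The function name is my own, and the column order should be checked against the header of your own .pos file before trusting the output.

```python
from statistics import mean


def average_fixed_position(pos_text, q_wanted=1):
    """Average latitude, longitude and height of rows with the wanted Q flag.

    Assumed layout (RTKLIB lat/lon/height output):
        % header lines start with a percent sign
        date time lat(deg) lon(deg) height(m) Q ns ...
    """
    lats, lons, heights = [], [], []
    for line in pos_text.splitlines():
        # Skip blank lines and the '%' header block.
        if not line.strip() or line.lstrip().startswith("%"):
            continue
        fields = line.split()
        if len(fields) < 6:
            continue  # not a complete solution row
        if int(fields[5]) != q_wanted:
            continue  # keep only the requested quality flag (1 = fix)
        lats.append(float(fields[2]))
        lons.append(float(fields[3]))
        heights.append(float(fields[4]))
    if not lats:
        raise ValueError("no solutions found with Q=%d" % q_wanted)
    return mean(lats), mean(lons), mean(heights)
```

Reading the file with `open(path).read()` and passing the text in is enough; filtering by time interval would still need to be done beforehand or added as an extra condition.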

My original thought was this: if I know my “original” base location from the 30 minute average and I have a “corrected” base position derived from PPK, could I not shift all my surveyed rover points in GIS by the difference between the “original” and “corrected” positions? Intuitively this makes sense to me because it’s all relative (base and rover), but on my first run the shifted points did not match my corrected rover positions. I am still working through this technique because I am not convinced I can’t do it this way, and it could in fact potentially be applicable to my NAD83 NTRIP issue as well…
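To make the shift idea concrete, here is a rough sketch of how it could be done outside of GIS. It converts everything to ECEF metres, takes the vector from the averaged base position to the PPK base position, and adds that same vector to every rover point, which keeps each base-to-rover baseline unchanged. The function names are my own and the constants are the standard WGS 84 ellipsoid values. It only works if the "original" coordinate is the one the base actually used during the survey; the approximate position in a RINEX header is not necessarily that number, so treat that as a possible source of mismatch rather than a diagnosis.

```python
import math

A = 6378137.0            # WGS 84 semi-major axis (m)
F = 1 / 298.257223563    # WGS 84 flattening
E2 = F * (2 - F)         # first eccentricity squared


def llh_to_ecef(lat_deg, lon_deg, h):
    """Geodetic latitude/longitude (deg) and ellipsoidal height (m) to ECEF (m)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = A / math.sqrt(1 - E2 * math.sin(lat) ** 2)
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - E2) + h) * math.sin(lat)
    return x, y, z


def ecef_to_llh(x, y, z):
    """ECEF (m) back to geodetic, by fixed-point iteration (not for the poles)."""
    lon = math.atan2(y, x)
    p = math.hypot(x, y)
    lat = math.atan2(z, p * (1 - E2))  # initial guess
    for _ in range(10):
        n = A / math.sqrt(1 - E2 * math.sin(lat) ** 2)
        h = p / math.cos(lat) - n
        lat = math.atan2(z, p * (1 - E2 * n / (n + h)))
    n = A / math.sqrt(1 - E2 * math.sin(lat) ** 2)
    h = p / math.cos(lat) - n
    return math.degrees(lat), math.degrees(lon), h


def shift_rover_points(base_avg, base_ppk, rover_points):
    """Apply the (base_ppk - base_avg) ECEF offset to each rover point.

    All points are (lat_deg, lon_deg, height_m) tuples in the same frame.
    """
    bx, by, bz = llh_to_ecef(*base_avg)
    cx, cy, cz = llh_to_ecef(*base_ppk)
    dx, dy, dz = cx - bx, cy - by, cz - bz
    shifted = []
    for point in rover_points:
        x, y, z = llh_to_ecef(*point)
        shifted.append(ecef_to_llh(x + dx, y + dy, z + dz))
    return shifted
```

Working in ECEF avoids mixing degrees and metres; over a small site a shift in projected coordinates should give nearly the same answer, as long as both base positions and the rover points share one coordinate system.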

I then took my raw rover logs into RTKPost, corrected them against my Emlid base logs using the new PPK-derived base coordinate, and ran it. I got good results here, just as I did with the base. So here is where I am currently: after I PPK both the base and rover logs, I use RTKPost to convert their respective position files into GPX tracks that drop right into GIS software. In QGIS they drop right in, but in ArcMap they have to be imported.

With the GPX tracks in GIS I was able to use some tools I am familiar with. This is what my base GPX track looks like in GIS with the average plotted (white dot).

This is what my rover track then looks like in GIS.

From the rover track I was able to focus on the areas where I had longer occupation times (my GCPs). I filtered and queried only the positions I cared about for each GCP. I could tell where a longer occupation had occurred from the “clustering” of points. I simply selected the points of interest out of the rover track into a new individual file per GCP. I have not done this yet, but my plan is to run statistics on each file to arrive at an average coordinate and then plot it.
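The cluster selection could probably be scripted as well. The sketch below is one simple way to do it, not something I have run on real logs: it walks the track in time order, keeps adding points to a group while they stay within a small radius of the group's first point, and averages any group with enough points to count as an occupation. The function names, the 0.5 m radius, and the 30 point minimum are all placeholder assumptions to tune for your own logging rate and occupation time.

```python
import math


def _dist_m(p, q):
    """Approximate horizontal distance (m) between two (lat, lon, ...) points."""
    mid_lat = math.radians((p[0] + q[0]) / 2)
    north = math.radians(q[0] - p[0]) * 6371000.0
    east = math.radians(q[1] - p[1]) * 6371000.0 * math.cos(mid_lat)
    return math.hypot(north, east)


def _mean_point(group):
    """Component-wise mean of a list of (lat, lon, height) tuples."""
    n = len(group)
    return tuple(sum(pt[i] for pt in group) / n for i in range(3))


def average_occupations(track, radius_m=0.5, min_points=30):
    """Return one averaged (lat, lon, height) per stationary cluster.

    track: time-ordered list of (lat_deg, lon_deg, height_m) tuples.
    """
    results, group = [], []
    for pt in track:
        # A point outside the radius ends the current group.
        if group and _dist_m(group[0], pt) > radius_m:
            if len(group) >= min_points:
                results.append(_mean_point(group))
            group = []
        group.append(pt)
    # Do not forget the last group in the track.
    if len(group) >= min_points:
        results.append(_mean_point(group))
    return results
```

Points collected while walking between GCPs end up in tiny groups and are dropped, so the output should have one row per occupied point, in the order they were visited.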

Well, I can say this wasn’t terrible, but it definitely wasn’t as automated as I would like. If you have lots of points, you are going to have lots of fun unless you script this task somehow. However, I still think the best solution would be to take the transformation from the original base coordinate to the PPK base solution and apply the exact same transformation to the rover points. But when I first tried this, my shifted points didn’t land on the corrected rover clusters. Does this workflow seem reasonable? Where could I have introduced error in the transformation? As I said before, I did not write down the averaged base coordinate. I thought I had found it in the RINEX header of my position file when I ran RTKPost on the Reach rover and Reach base logs before inputting the corrected base coordinate…

EDIT: This is the tool and workflow I will be using in QGIS

Averaging Points in QGIS