OK, that is bad. It’s the time when everyone ships banana products (products that ripen at the customer)… I ran out of time for proper testing, so please excuse this dud (“Rohrkrepierer”). Thank you for your help, and I hope you are ready to try again!

The program starts up in a window too small to see the selection buttons on the right-hand side. This could be my Windows 10 display scaling. I don’t know if you can overcome this and make it suitable for all screen scales.

I have to figure out how to solve that in an elegant way. For now, the program starts with a far too large window. I hope that helps.

If you are writing this for wider consumption, I would add the option of listing images by .JPG and .DNG at least.

OK, I added some file formats.
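Filtering a folder by extension works best case-insensitively, so that .JPG, .jpg, .DNG and .dng are all picked up. A minimal sketch using Python’s pathlib; apart from .JPG and .DNG, the extension set is an assumption for illustration and not the list the program actually uses:

```python
from pathlib import Path

# Hypothetical extension set: only .JPG and .DNG are named in this thread.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".dng", ".tif", ".tiff"}


def list_images(folder):
    """Return image files in `folder`, sorted by file name.

    Extensions are compared in lower case, so IMG_0001.JPG and
    IMG_0002.dng both match.
    """
    return sorted(
        (p for p in Path(folder).iterdir()
         if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS),
        key=lambda p: p.name,
    )
```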

You are outputting tab-delimited files. I would give them the extension .TXT and call them tab-delimited text instead of .CSV. I didn’t see any commas.

You are right. That is because Photoscan does not import *.tab files, and one could read CSV as “character-separated values”. I have changed the output file format to *.txt.
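A minimal sketch of writing such a tab-delimited .txt file with Python’s standard csv module. The column names and the number of decimals are assumptions for illustration, not the program’s actual layout or a format Photoscan requires:

```python
import csv
import io


def write_positions_txt(rows, stream):
    """Write (label, longitude, latitude, height) rows as tab-delimited text.

    Hypothetical column layout; adapt it to what the importing software expects.
    """
    writer = csv.writer(stream, delimiter="\t", lineterminator="\n")
    writer.writerow(["label", "longitude", "latitude", "height"])
    for label, lon, lat, h in rows:
        # Keep enough decimals that the output does not add its own rounding error.
        writer.writerow([label, f"{lon:.9f}", f"{lat:.9f}", f"{h:.4f}"])


buf = io.StringIO()
write_positions_txt([("IMG_0001.JPG", 13.123456789, 52.987654321, 45.1234)], buf)
print(buf.getvalue())
```

When writing to disk instead of a StringIO, open the file with `open(path, "w", newline="")` so the csv module controls the line endings.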

I used your program and told it to calculate a time offset of 0 ms to make sure it gave me the same values back. It did not. It seemed to calculate random offsets corresponding to random values between 5 ms and 300 ms. A floating point precision problem? I tried a 10,000 ms offset and am getting 400 m position offsets, and they are off track. I should only be seeing 50 m, and the points should appear somewhere on the original track.

I think I found part of the error, which I introduced when adapting my antenna offset correction to calculate the time offset. I think I have now narrowed it down to a floating point precision problem (a difference of a few mm).
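For reference, a minimal sketch of applying a time offset by linear interpolation between neighbouring logged epochs. This is an assumption about the approach, not necessarily how the program interpolates; coordinates are assumed to be in metres, and the example track is a hypothetical straight line flown at 5 m/s and logged at 5 Hz. With 0 ms the result is the position at the time mark itself, and with 10,000 ms it moves 50 m along the track, which is the behaviour expected in the test above.

```python
from bisect import bisect_right


def interpolate_position(times, positions, t):
    """Linearly interpolate an (x, y, z) position at time t (seconds).

    `times` must be strictly increasing; `positions` holds matching tuples.
    """
    if not times[0] <= t <= times[-1]:
        raise ValueError("t lies outside the logged track")
    i = bisect_right(times, t) - 1
    if i == len(times) - 1:  # t equals the last epoch exactly
        return positions[-1]
    t0, t1 = times[i], times[i + 1]
    w = (t - t0) / (t1 - t0)  # fraction of the way from epoch i to epoch i + 1
    p0, p1 = positions[i], positions[i + 1]
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))


def camera_position(times, positions, event_time, offset_ms):
    """Position at the time-mark time shifted by `offset_ms` milliseconds."""
    return interpolate_position(times, positions, event_time + offset_ms / 1000.0)


# Hypothetical test track: 20 s along x at 5 m/s, one epoch every 200 ms.
times = [k * 0.2 for k in range(101)]
positions = [(5.0 * t, 0.0, 0.0) for t in times]
print(camera_position(times, positions, 5.1, 0))
print(camera_position(times, positions, 5.1, 10000))
```

Because each result is a weighted mix of two neighbouring epochs, an offset point can never leave the logged track, which makes off-track results a useful symptom of a bug elsewhere.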

Comment/edit: I have realized that it is not a floating point precision error. It is caused by the time-mark timestamps being rounded in the pos file: while the time is 2018 11 10 11 32 37.5185379 in the observation file, it is 2018 11 10 11 32 37.519 in the pos file. That is where the error comes from. @wsurvey, can you replicate that error in your spreadsheet?
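The size of this effect can be estimated directly: the rounding shifts the time mark by about 0.46 ms, and at constant speed the interpolated position moves by speed × time error. A minimal sketch, assuming straight flight at the approximate speeds mentioned in this thread (~6 m/s and ~2 m/s):

```python
def timestamp_rounding_error(t_s, decimals=3):
    """Time shift (s) introduced when t_s is written with `decimals` decimal places."""
    return round(t_s, decimals) - t_s


def position_error_m(speed_m_s, time_error_s):
    """Along-track displacement caused by a timing error at constant speed."""
    return abs(speed_m_s * time_error_s)


# Seconds field of the time mark: observation file 37.5185379 vs. pos file 37.519.
dt = timestamp_rounding_error(37.5185379, decimals=3)
for speed in (6.0, 2.0):  # approximate flight speeds from the thread
    print(f"{speed} m/s -> {position_error_m(speed, dt) * 1000:.2f} mm")
```

This gives roughly 2.8 mm at 6 m/s and 0.9 mm at 2 m/s, in line with the ~3 mm seen in the 0 ms test. Taking the time marks with their full precision should remove this part of the error.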

When using WGS84 coordinates in decimal degrees (dd.dddddd), the problem results in an error of about 3 mm when entering 0 ms as the time offset:

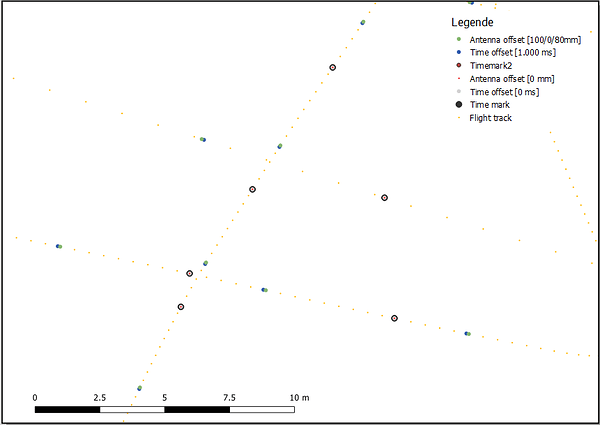

You might have noticed a different distance for the 1,000 ms time offset. This is due to different flight speeds (~6 m/s and ~2 m/s). The flight direction can be seen from the antenna offset: the green point always lies ahead of the blue time offset point in the flight direction. The image interval was about 2,800 to 3,000 ms. The logging frequency was 5 Hz, so the distance between consecutive yellow points represents 200 ms of flight (and therefore shows the flight speed).
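The arithmetic behind reading the speed off the plot can be sketched as follows: at 5 Hz, the spacing between consecutive logged points times 5 gives the speed in m/s, and speed × offset gives the expected shift. The point spacings below are hypothetical values chosen to reproduce ~6 m/s and ~2 m/s, and coordinates are assumed to be in metres (decimal degrees would need converting first):

```python
import math

LOG_RATE_HZ = 5.0  # logging frequency from the post: one epoch every 200 ms


def speed_from_spacing(p0, p1, rate_hz=LOG_RATE_HZ):
    """Ground speed (m/s) from two consecutive logged points given in metres."""
    return math.dist(p0, p1) * rate_hz


def offset_shift_m(speed_m_s, offset_ms):
    """Distance travelled along the track during a time offset in milliseconds."""
    return speed_m_s * offset_ms / 1000.0


fast = speed_from_spacing((0.0, 0.0), (1.2, 0.0))  # 1.2 m per 200 ms, about 6 m/s
slow = speed_from_spacing((0.0, 0.0), (0.0, 0.4))  # 0.4 m per 200 ms, about 2 m/s
print(offset_shift_m(fast, 1000), offset_shift_m(slow, 1000))
```

So the same 1,000 ms offset moves the point about 6 m on the fast leg and about 2 m on the slow leg.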

For XYZ ECEF coordinates the error is about 12 mm due to the larger numbers we are dealing with.
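To judge how much the larger ECEF magnitudes can matter on their own, one can look at the gap between adjacent float64 values (the ULP) at those magnitudes. A minimal sketch, assuming Python 3.9+ for `math.ulp` and illustrative magnitudes (a latitude of 52° and ECEF values around 6.4e6 m) rather than values from the test data; the exact cause of the larger ECEF figure is not established by this check:

```python
import math

# Illustrative magnitudes (assumptions, not taken from the test data):
LATITUDE_DEG = 52.0          # a latitude in north-east Germany
ECEF_MAGNITUDE_M = 6.4e6     # order of magnitude of ECEF X/Y/Z in metres
METRES_PER_DEGREE = 111_320  # rough length of one degree of latitude

# math.ulp(x) is the gap between x and the next larger float64 value.
ulp_latitude_m = math.ulp(LATITUDE_DEG) * METRES_PER_DEGREE
ulp_ecef_m = math.ulp(ECEF_MAGNITUDE_M)

print(f"float64 resolution at {LATITUDE_DEG} deg: {ulp_latitude_m:.1e} m")
print(f"float64 resolution at {ECEF_MAGNITUDE_M:.0e} m: {ulp_ecef_m:.1e} m")
```

Both gaps are below a nanometre, which suggests that the double-precision storage itself cannot produce millimetre differences; the number of decimals written to the input and output files (as with the rounded time marks) is a more likely place to look.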

If you or someone else is ready to do another test, even though the floating point precision problem still exists, you can download the updated version here: downlink.

Unfortunately, the market situation in north-east Germany is very challenging. I also realize that the areas of application are limited in Germany, especially for me, since I’m a utilitarian and I’m not willing to contribute to many activities such as building animal farms and things that drive climate change…